Added 22/07/2025

Machine Learning / regression

adversarial_regression

Datasets

Dimension

{

"x": 9,

"y": 16,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "infeasible",

"x": [0,0,0,0,0,0,0,0,0],

"F": 100.2705328714835,

"G": [],

"H": [],

"f": 0.23912969134058668,

"g": [-0.01173253,-0.00550049],

"h": []

}Dimension

{

"x": 7,

"y": 12,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 12,

"y": 22,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 8,

"y": 14,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 10,

"y": 18,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 5,

"y": 8,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 9,

"y": 16,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 9,

"y": 16,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 13,

"y": 24,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 6,

"y": 10,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 3,

"y": 4,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 6,

"y": 10,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 11,

"y": 20,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 21,

"y": 40,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}Dimension

{

"x": 12,

"y": 22,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}Solution

{

"optimality": "unknown"

}$title adversarial_regression

$onText

{description}

$offText

set i / 1*4 /;

variables obj_val_upper, obj_val_lower, x(i), y(i);

equations obj_eq_upper, obj_eq_lower

G1_upper, G2_upper, H1_upper, H2_upper,

g1_lower, g2_lower, h1_lower, h2_lower;

* Objective functions

obj_eq_upper.. obj_val_upper =e= ;

obj_eq_lower.. obj_val_lower =e= ;

* Upper-level constraints

G1_upper.. x('1') =g= 1.0;

G2_upper.. x('2') =g= -2.0;

H1_upper.. x('3') =e= 3.0;

H2_upper.. x('4') =e= -4.0;

* Lower-level constraints

g1_lower.. y('1') =g= 1.0;

g2_lower.. y('2') =g= -2.0;

h1_lower.. y('3') =e= 3.0;

h2_lower.. y('4') =e= -4.0;

* Solve

model adversarial_regression / all /;

$echo bilevel x min obj_val_lower y obj_eq_lower g1_lower g2_lower h1_lower h2_lower > "%emp.info%"

solve adversarial_regression us emp min obj_val_upper;import numpy as np

import os

import pandas as pd

# Properties

name: str = "adversarial_regression"

category: str = "machine_learning"

subcategory: str = "regression"

datasets: list = [

'regression_auto_mpg.csv',

'regression_avocado_price.csv',

'regression_boston_house_price.csv',

'regression_combined_cycle_power_plant.csv',

'regression_concrete_compressive_strength.csv',

'regression_energy_efficiency.csv',

'regression_forest_fires.csv',

'regression_insurance.csv',

'regression_liver_disorders.csv',

'regression_real_estate_valuation.csv',

'regression_toy_2_features.csv',

'regression_toy_5_features.csv',

'regression_toy_10_features.csv',

'regression_toy_20_features.csv',

'regression_wine_quality.csv',

]

paths: list = [

os.path.join('bolib3', 'data', 'regression', d) for d in datasets

]

# Parameters

rho = 0.1

delta = 0.5

y_idx = np.array([0, 1])

# Feasible point

x0 = np.zeros(9)

y0 = np.array([0.1]*16)

# Methods

def F(x, y, data):

"""

Upper-level objective function

"""

D = data["D"]

gamma = data["gamma"]

adv_labels = data["adv_labels"]

m = len(y_idx)

p = D.shape[1]

dataset = add_ones(np.concatenate((D, y.reshape((m, p)))))

labels = np.concatenate((gamma, adv_labels))

err = np.matmul(x, np.transpose(dataset)) - labels

return np.matmul(err, err) + (1/rho)*np.matmul(x, x)

def G(x, y, data=None):

"""

Upper-level inequality constraints

"""

return np.empty(0)

def H(x, y, data=None):

"""

Upper-level equality constraints

"""

return np.empty(0)

def f(x, y, data):

"""

Lower-level objective function

"""

D = data["D"]

Z = data["Z"]

m = len(y_idx)

p = D.shape[1]

err = np.matmul(x, np.transpose(add_ones(y.reshape((m, p))))) - Z

return np.matmul(err, err)

def g(x, y, data):

"""

Lower-level inequality constraints

"""

m = len(y_idx)

p = data["D"].shape[1]

y_init = data["y_init"]

return delta - np.array([cosim(y.reshape((m,p))[i], y_init.reshape((m,p))[i]) for i in range(m)])

def h(x, y, data=None):

"""

Lower-level equality constraints

"""

return np.empty(0)

def read_data(filepath=paths[0]):

"""

If the bilevel program is parameterized by data, this function should

provide code to read data file and return an appropriate python structure.

"""

df = pd.read_csv(filepath)

label_name = df.iloc[:,0].name

df[label_name] = (df[label_name] - df[label_name].min())/(df[label_name].max() - df[label_name].min())

D = df.drop(label_name, axis = 1).drop(y_idx).to_numpy()

gamma = df[label_name].drop(y_idx).to_numpy()

adv_labels = df[label_name][y_idx].to_numpy()

Z = adv_labels + np.std(gamma)

y_init = df.drop(label_name, axis = 1).loc[y_idx].to_numpy()

return {"D" : D, "gamma" : gamma, "adv_labels" : adv_labels, "Z" : Z, "y_init" : y_init}

def dimension(key='', data=None):

"""

If the argument 'key' is not specified, then:

- a dictionary mapping variable/function names (str) to the corresponding dimension (int) is returned.

If the first argument 'key' is specified, then:

- a single integer representing the dimension of the variable/function with the name {key} is returned.

"""

n = {

"x": data["D"].shape[1] + 1, # Upper-level variables

"y": len(y_idx)*(data["D"].shape[1]), # Lower-level variables

"F": 1, # Upper-level objective functions

"G": 0, # Upper-level inequality constraints

"H": 0, # Upper-level equality constraints

"f": 1, # Lower-level objective functions

"g": len(y_idx), # Lower-level inequality constraints

"h": 0, # Lower-level equality constraints

}

if key in n:

return n[key]

return n

# Extra Functions

def add_ones(dataset):

return np.concatenate((np.ones((len(dataset), 1)), dataset), 1)

def cosim(x, x0):

return (np.matmul(x, x0))/(np.sqrt(np.matmul(x, x))*np.sqrt(np.matmul(x0, x0)))

classdef adversarial_regression

%{

Comming soon

%}

properties(Constant)

name = 'adversarial_regression';

category = 'machine_learning';

subcategory = 'regression';

end

end\subsection{adversarial\_regression}

\label{subsec:adversarial_regression}

% Description

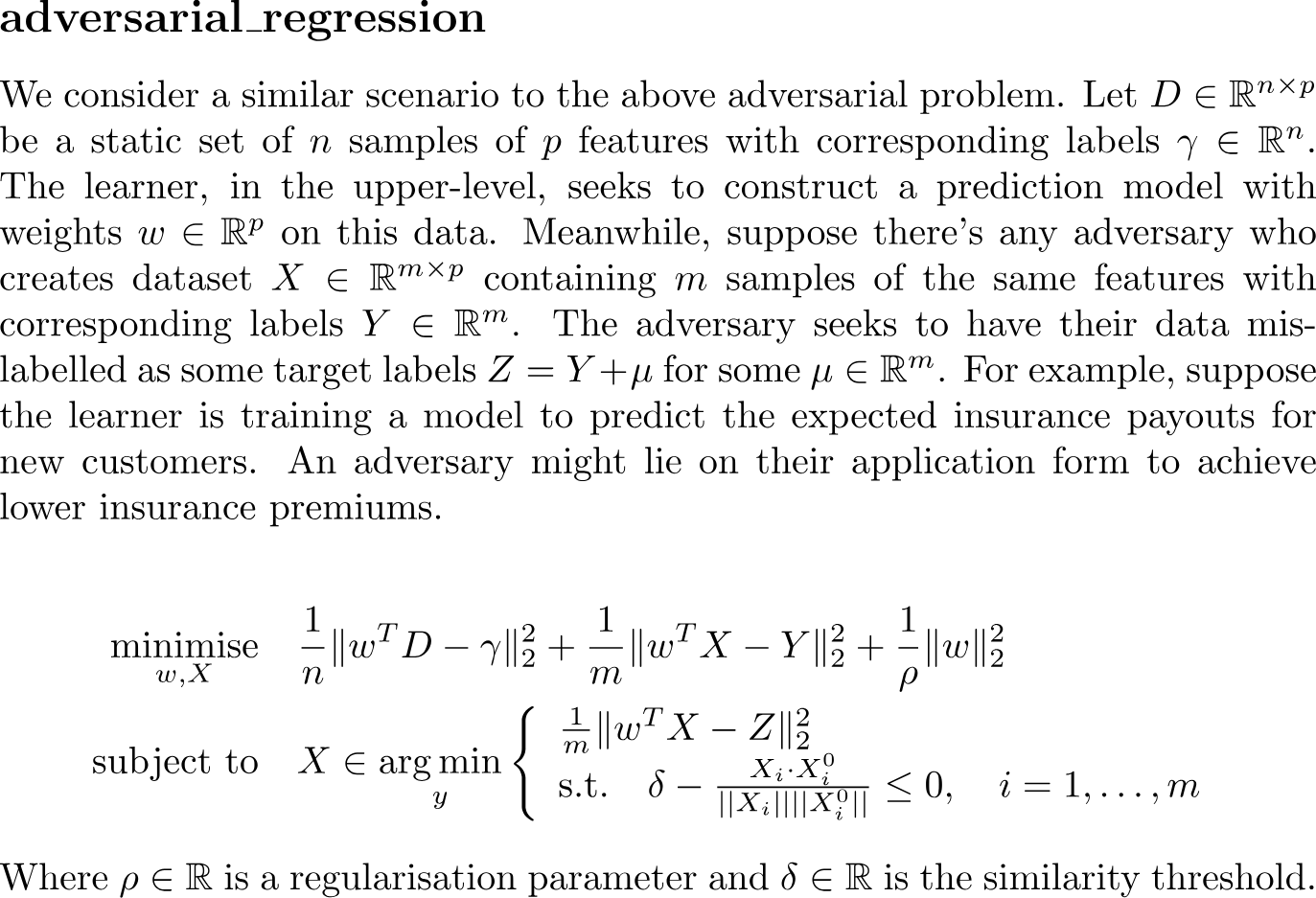

We consider a similar scenario to the above adversarial problem. Let $D \in \mathbb{R}^{n \times p}$ be a static set of $n$ samples of $p$ features with corresponding labels $\gamma \in \mathbb{R}^n$. The learner, in the upper-level, seeks to construct a prediction model with weights $w \in \mathbb{R}^p$ on this data. Meanwhile, suppose there's any adversary who creates dataset $X \in \mathbb{R}^{m \times p}$ containing $m$ samples of the same features with corresponding labels $Y \in \mathbb{R}^m$. The adversary seeks to have their data mis-labelled as some target labels $Z = Y + \mu$ for some $\mu \in \mathbb{R}^m$. For example, suppose the learner is training a model to predict the expected insurance payouts for new customers. An adversary might lie on their application form to achieve lower insurance premiums.

% Equation

\begin{flalign*}

\minimise_{w, X} \quad

& \frac{1}{n} \Vert w^TD - \gamma \Vert_2^2 + \frac{1}{m} \Vert w^TX-Y \Vert_2^2 + \frac{1}{\rho} \Vert w \Vert_2^2 \\

\subjectto \quad

& X \in \argmin_{y}

\left\{

\begin{array}{l}

\frac{1}{m} \Vert w^TX - Z \Vert_2^2 \\

\text{s.t.} \quad \delta - \frac{X_i \cdot X_i^0}{||X_i||||X_i^0||} \leq 0, \quad i = 1,\dots,m \\

\end{array}

\right.

\end{flalign*}

Where $\rho \in \mathbb{R}$ is a regularisation parameter and $\delta \in \mathbb{R}$ is the similarity threshold.