Added 21/07/2025

Machine Learning / classification

text_based_adversarial

Datasets

Dimension

{

"x": 129,

"y": 256,

"F": 1,

"G": 0,

"H": 0,

"f": 1,

"g": 2,

"h": 0

}

Solution

{

"optimality": "infeasible",

"F": 0.6758291770008724,

"G": [],

"H": [],

"f": 782.2512115375745,

"g": [-0.5,-0.5],

"h": []

}

\subsection{text\_based\_adversarial}

\label{subsec:spam_email}

% Description

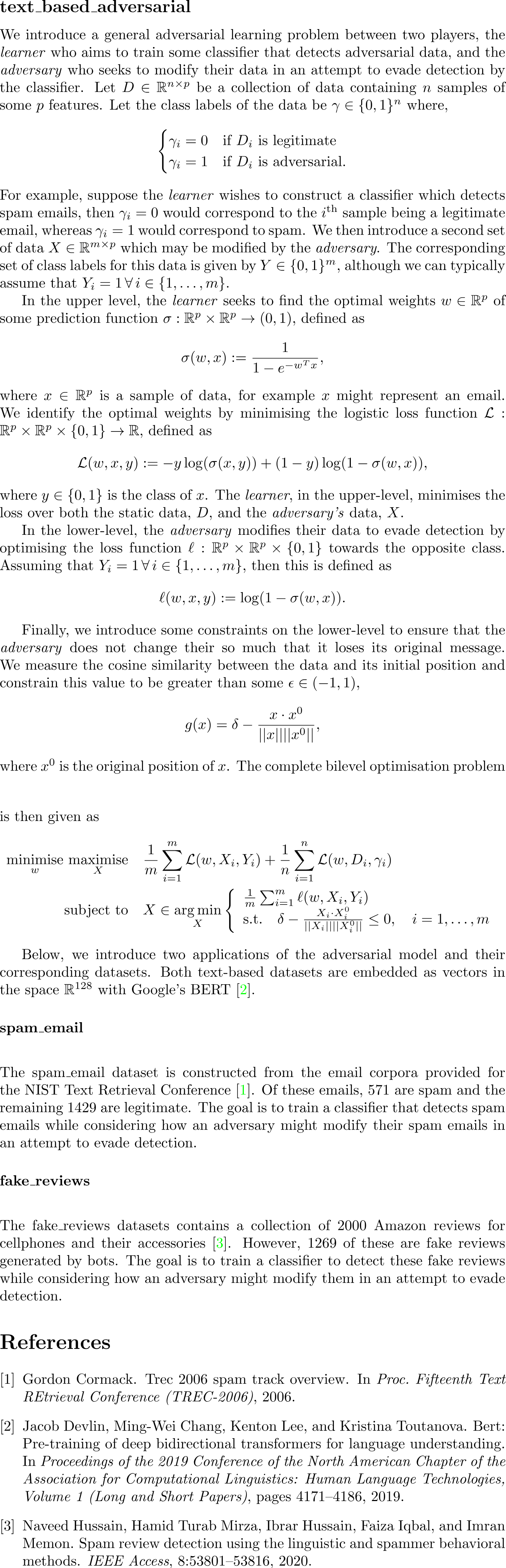

We introduce a general adversarial learning problem between two players, the \textit{learner} who aims to train some classifier that detects adversarial data, and the \textit{adversary} who seeks to modify their data in an attempt to evade detection by the classifier.

Let $D \in \mathbb{R}^{n \times p}$ be a collection of data containing $n$ samples of some $p$ features.

Let the class labels of the data be $\gamma \in \{0,1\}^n$ where,

\[\begin{cases}

\gamma_i = 0 & \text{if } D_i \text{ is legitimate} \\

\gamma_i = 1 & \text{if } D_i \text{ is adversarial}.

\end{cases}\]

For example, if the \textit{learner} wishes to construct a classifier that detects spam emails, then $\gamma_i = 0$ corresponds to the $i^{\text{th}}$ sample being a legitimate email, whereas $\gamma_i = 1$ corresponds to spam.

We then introduce a second set of data $X \in \mathbb{R}^{m \times p}$ which may be modified by the \textit{adversary}.

The corresponding set of class labels for this data is given by $Y \in \{0,1\}^m$, although we can typically assume that $Y_i = 1 \, \forall \, i \in \{1,\dots,m\}$.

% Paragraph

In the upper level, the \textit{learner} seeks to find the optimal weights $w \in \mathbb{R}^p$ of some prediction function $\sigma : \mathbb{R}^p \times \mathbb{R}^p \rightarrow (0,1)$, defined as

\[\sigma(w, x) \coloneqq \frac{1}{1+e^{-w^Tx}},\]

where $x \in \mathbb{R}^p$ is a sample of data; for example, $x$ might represent an email.

We identify the optimal weights by minimising the logistic loss function $\mathcal{L}: \mathbb{R}^p \times \mathbb{R}^p \times \{0,1\} \rightarrow \mathbb{R}$, defined as

\[\mathcal{L}(w,x,y) \coloneqq - y \log(\sigma(w, x)) - (1-y) \log(1 - \sigma(w, x)),\]

where $y \in \{0,1\}$ is the class of $x$.
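
As a quick numerical illustration (the score values are chosen by us and do not come from either dataset), take the logistic function $\sigma(w,x) = 1/(1+e^{-w^Tx})$ and the usual cross-entropy form of the logistic loss. A sample on the decision boundary, $w^Tx = 0$, gives

\[\sigma(w,x) = \tfrac{1}{2}, \qquad \mathcal{L}(w,x,0) = \mathcal{L}(w,x,1) = -\log\tfrac{1}{2} = \log 2 \approx 0.693,\]

whereas a sample with $w^Tx = 2$ gives $\sigma(w,x) \approx 0.881$ and

\[\mathcal{L}(w,x,1) = -\log \sigma(w,x) \approx 0.127, \qquad \mathcal{L}(w,x,0) = -\log(1 - \sigma(w,x)) \approx 2.127.\]

A confident correct prediction is therefore penalised lightly, and a confident wrong prediction heavily.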

At the upper level, the \textit{learner} minimises the loss over both the static data, $D$, and the \textit{adversary's} data, $X$.

% Paragraph

In the lower level, the \textit{adversary} modifies their data to evade detection by minimising the loss function $\ell : \mathbb{R}^p \times \mathbb{R}^p \times \{0,1\} \rightarrow \mathbb{R}$ towards the opposite class. Assuming that $Y_i = 1 \, \forall \, i \in \{1,\dots,m\}$, this is defined as

\[\ell(w,x,y) \coloneqq -\log(1 - \sigma(w, x)).\]

% Paragraph

Finally, we introduce some constraints on the lower level to ensure that the \textit{adversary} does not change their data so much that it loses its original message.

We measure the cosine similarity between the data and its initial position and constrain this value to be at least some $\delta \in (-1,1)$,

\[g(x) = \delta - \frac{x \cdot x^0}{||x||||x^0||},\]

where $x^0$ is the original position of $x$.
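
To illustrate, assume $\delta = 0.5$, the value used in the accompanying Python code. An unmodified sample, $x = x^0$, has cosine similarity $1$, so

\[g(x^0) = 0.5 - 1 = -0.5 \leq 0,\]

and the constraint is satisfied. At the other extreme, $x = -x^0$ has cosine similarity $-1$, giving $g(-x^0) = 0.5 + 1 = 1.5 > 0$, which is infeasible. More generally, any $\delta < 1$ leaves the original data feasible, provided $x^0 \neq 0$.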

The complete bilevel optimisation problem is then given as

\begin{flalign*}

\minimise_{w} \, \maximise_{X} \quad

& \frac{1}{m} \sum_{i=1}^{m} \mathcal{L}(w,X_i,Y_i) + \frac{1}{n} \sum_{i=1}^n \mathcal{L}(w, D_i, \gamma_i) \\

\subjectto \quad

& X \in \argmin_{X}

\left\{

\begin{array}{l}

\frac{1}{m} \sum_{i=1}^m \ell(w, X_i, Y_i) \\

\text{s.t.} \quad \delta - \frac{X_i \cdot X_i^0}{||X_i||||X_i^0||} \leq 0, \quad i = 1,\dots,m \\

\end{array}

\right.

\end{flalign*}

Below, we introduce two applications of the adversarial model and their corresponding datasets.

Both text-based datasets are embedded as vectors in the space $\mathbb{R}^{128}$ with Google's BERT~\cite{Devlin2019}.

\subsubsection*{spam\_email}\hfill\\

The spam\_email dataset is constructed from the email corpora provided for the NIST Text Retrieval Conference~\cite{trec06}.

The dataset contains 2000 emails, of which 571 are spam and the remaining 1429 are legitimate.

The goal is to train a classifier that detects spam emails while considering how an adversary might modify their spam emails in an attempt to evade detection.

\subsubsection*{fake\_reviews}\hfill\\

The fake\_reviews dataset contains a collection of 2000 Amazon reviews for cellphones and their accessories~\cite{Naveed2020}.

Of these, 1269 are fake reviews generated by bots.

The goal is to train a classifier to detect these fake reviews while considering how an adversary might modify them in an attempt to evade detection.

classdef text_based_adversarial

    %{
    Coming soon
    %}
    properties(Constant)
        name = 'text_based_adversarial';
        category = 'machine_learning';
        subcategory = 'classification';
        datasets = {
            'adversarial_spam_email.csv';
            'adversarial_fake_reviews.csv'
        };
    end

end

import numpy as np

import os

import pandas as pd

"""

text

"""

# Properties

name: str = "text_based_adversarial"

category: str = "machine_learning"

subcategory: str = "classification"

datasets: list = [

"adversarial_spam_email.csv",

"adversarial_fake_reviews.csv",

]

paths: list = [

os.path.join("bolib3", "data", "classification", "adversarial_spam_email.csv"),

os.path.join("bolib3", "data", "classification", "adversarial_fake_reviews.csv")

]

# Feasible point

x0 = np.zeros(128+1)

y0 = np.array([0.1]*(128*2))

# Parameters

rho = 1.0

delta = 0.5

y_idx = np.array([0,1])

def F(x, y, data):
    """
    Upper-level objective function
    """
    D = data["D"]
    gamma = data["gamma"]
    n = len(D)
    m = len(y_idx)
    p = D.shape[1]
    # Stack the static data D on the adversary's data y, then append a bias column
    dataset = add_ones(np.concatenate((D, y.reshape((m, p)))))
    # The adversary's samples are all labelled 1
    labels = np.concatenate((gamma, np.ones(m)))
    prob = sigma(x, dataset)
    # Mean logistic loss plus an L2 penalty on the weights x
    loss = -(1/(n+m)) * sum(labels*np.log(prob) + (1 - labels)*np.log(1 - prob))
    return loss + (rho/(2*(n+m)))*np.matmul(x, x)

def G(x, y, data):
    """
    Upper-level inequality constraints
    """
    return np.empty(0)

def H(x, y, data):
    """
    Upper-level equality constraints
    """
    return np.empty(0)

def f(x, y, data):
    """
    Lower-level objective function
    """
    D = data["D"]
    gamma = data["gamma"]
    m = len(y_idx)
    p = D.shape[1]
    # Stack the static data D on the adversary's data y, then append a bias column
    dataset = add_ones(np.concatenate((D, y.reshape((m, p)))))
    labels = np.concatenate((gamma, np.ones(m)))
    prob = sigma(x, dataset)
    # Logistic loss evaluated towards the opposite class (labels flipped)
    loss = -(1/m) * sum((1 - labels)*np.log(prob) + labels*np.log(1 - prob))
    return loss + (rho/(2*m))*np.matmul(x, x)

def g(x, y, data):
    """
    Lower-level inequality constraints
    """
    m = len(y_idx)
    p = data["D"].shape[1]
    Y = y.reshape((m, p))
    Y0 = y0.reshape((m, p))
    # One constraint per adversarial sample: delta - cosine similarity <= 0
    return delta - np.array([cosim(Y[i], Y0[i]) for i in range(m)])

def h(x, y, data):
    """
    Lower-level equality constraints
    """
    return np.empty(0)

def read_data(filepath=datasets[0]):
    """
    If the bilevel program is parameterized by data, this function should
    provide code to read data file and return an appropriate python structure.
    """
    df = pd.read_csv(filepath)
    # Rows indexed by y_idx are the adversary's samples, so they are excluded from D
    D = df.drop("label", axis=1).drop(y_idx).to_numpy()
    gamma = df["label"].drop(y_idx)
    return {"D": D, "gamma": gamma}

def dimension(key='', data=None):
    """
    If the argument 'key' is not specified, then:
    - a dictionary mapping variable/function names (str) to the corresponding dimension (int) is returned.
    If the first argument 'key' is specified, then:
    - a single integer representing the dimension of the variable/function with the name {key} is returned.
    """
    n = {
        "x": data["D"].shape[1] + 1,            # Upper-level variables
        "y": (data["D"].shape[1])*len(y_idx),   # Lower-level variables
        "F": 1,                                 # Upper-level objective functions
        "G": 0,                                 # Upper-level inequality constraints
        "H": 0,                                 # Upper-level equality constraints
        "f": 1,                                 # Lower-level objective functions
        "g": len(y_idx),                        # Lower-level inequality constraints
        "h": 0,                                 # Lower-level equality constraints
    }
    if key in n:
        return n[key]
    return n

# Extra Functions

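def add_ones(dataset):
    # Append a column of ones so the last weight acts as a bias term
    return np.concatenate((dataset, np.ones((len(dataset), 1))), 1)

def sigma(w, dataset):
    # Logistic function applied to every row of the dataset
    return 1 / (1 + np.exp(-(np.matmul(w, dataset.T))))

def cosim(x, x0):
    # Cosine similarity between x and its original position x0
    return (np.matmul(x, x0))/(np.sqrt(np.matmul(x, x))*np.sqrt(np.matmul(x0, x0)))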
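
The lower-level constraint can be checked in isolation, without loading either CSV file. The following is a minimal sketch and not part of the library: the helper names `cosine_similarity` and `lower_level_constraints` are hypothetical, it uses only the standard library rather than NumPy, and it reuses the starting point (every entry 0.1) and `delta = 0.5` from the module above.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(ai * bi for ai, bi in zip(a, b))
    norm_a = math.sqrt(sum(ai * ai for ai in a))
    norm_b = math.sqrt(sum(bi * bi for bi in b))
    return dot / (norm_a * norm_b)


def lower_level_constraints(rows, rows0, delta):
    """Return delta - cosine similarity for each (modified, original) pair.

    A value <= 0 means the modified sample is still close enough to its original.
    """
    return [delta - cosine_similarity(r, r0) for r, r0 in zip(rows, rows0)]


# Assumed setup mirroring the module: 2 adversarial samples with 128 features each
p, m, delta = 128, 2, 0.5
y0_rows = [[0.1] * p for _ in range(m)]

# Unmodified data: cosine similarity is 1, so every constraint is satisfied
g_start = lower_level_constraints(y0_rows, y0_rows, delta)

# Data pointing in the opposite direction: cosine similarity is -1, so it is infeasible
flipped_rows = [[-v for v in row] for row in y0_rows]
g_flipped = lower_level_constraints(flipped_rows, y0_rows, delta)

print(g_start)
print(g_flipped)
```

At the starting point both constraint values are approximately -0.5, which agrees with the `g` entry reported in the Solution block, while the flipped data gives values of approximately 1.5 and therefore violates the constraint.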